Recently, I encountered and resolved several issues that were causing job failures and instability in an AWS-based GitLab Runner setup on Kubernetes. In this post, I’ll walk through the errors, their causes, and the solutions that worked for me.

Job Timeout While Waiting for Pod to Start

ERROR: Job failed (system failure): prepare environment: waiting for pod running: timed out waiting for pod to start

Adjusting poll_interval and poll_timeout helped resolve the issue:

- poll_timeout (default: 180s) – The maximum time the runner waits before timing out while connecting to a newly created pod.

- poll_interval (default: 3s) – How frequently the runner checks the pod’s status.

Increasing poll_timeout from 180s to 360s gave the pod more time to start, which prevented these failures.
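As a rough sketch, the change could look like this in the runner's config.toml. It assumes the Kubernetes executor is in use, so both settings sit under [runners.kubernetes]. poll_interval is shown at its default only for completeness, and the rest of the [[runners]] entry (name, URL, token and so on) is omitted.

```toml
# Fragment of config.toml (sketch); other [[runners]] settings are omitted.
[[runners]]
  executor = "kubernetes"
  [runners.kubernetes]
    poll_interval = 3    # seconds between pod status checks (default)
    poll_timeout = 360   # seconds to wait for the pod, raised from the default 180
```

A larger poll_timeout only lengthens how long a job waits before it fails. It does not make pods start faster, so slow node scale-up or large image pulls are still worth investigating separately.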

ErrImagePull: pull QPS exceeded

When the GitLab Runner starts multiple jobs that require pulling the same images (e.g., for services and builders), it can exceed the kubelet’s default pull rate limits:

- registryPullQPS (default: 5) – Limits the number of image pulls per second.

- registryBurst (default: 10) – Allows temporary bursts above registryPullQPS.

Instead of modifying kubelet parameters, I resolved this issue by changing the runner’s image pull policy from always to if-not-present to prevent unnecessary pulls:

[runners.kubernetes]

pull_policy = ["if-not-present"] # policy applied to jobs by default

allowed_pull_policies = ["always", "if-not-present"] # lets a pipeline request "always" when necessary

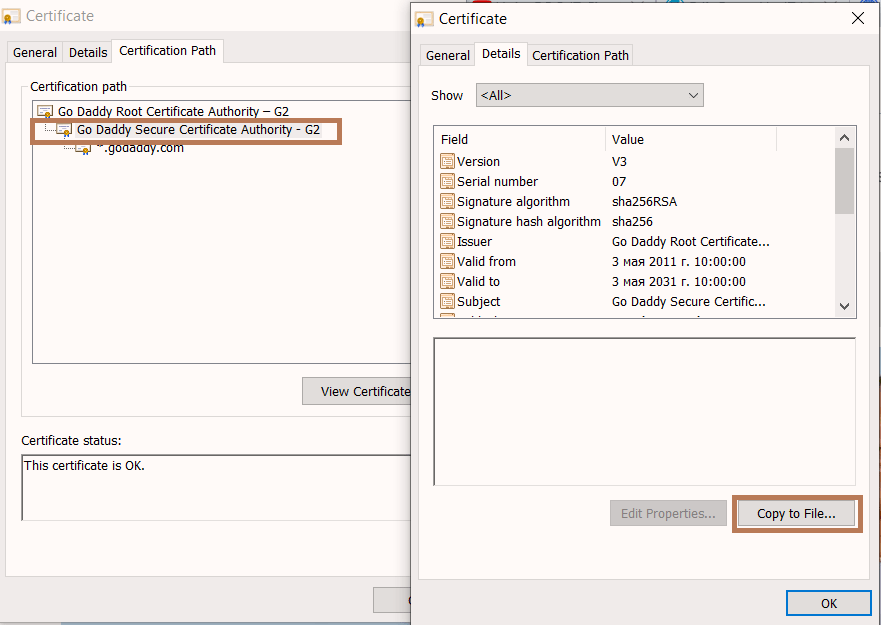

TLS Error When Preparing Environment

ERROR: Job failed (system failure): prepare environment: setting up trapping scripts on emptyDir: error dialing backend: remote error: tls: internal error

GitLab Runner communicates securely with the Kubernetes API to create executor pods. A TLS failure can occur due to API slowness, network issues, or misconfigured certificates.

Setting the feature flag FF_WAIT_FOR_POD_TO_BE_REACHABLE to true helped resolve the issue by ensuring that the runner waits until the pod is fully reachable before proceeding. This can be set in the GitLab Runner configuration:

[runners.feature_flags]

FF_WAIT_FOR_POD_TO_BE_REACHABLE = true

DNS Timeouts

dial tcp: lookup on <coredns ip>:53: read udp i/o timeout

While CoreDNS logs and network communication appeared normal, there was an unexpected spike in DNS load after launching more GitLab jobs than usual.

Scaling the CoreDNS deployment resolved the issue. Ideally, enable horizontal autoscaling for DNS so that load variations are handled automatically; see the Kubernetes docs or your cloud provider’s specific solution. They all share the same approach: add more replicas as load increases.

kubectl scale deployments -n kube-system coredns --replicas=4

If you have encountered other GitLab Runner issues, share them in the comments 🙂

Cheers!