Hi, folks!

At the recent Ignite 2015 sessions, Microsoft officially announced the new features of System Center 2016 and Windows Server 2016. Of course, this is not a complete list of the features that will be in WS 2016 RTM. Still, we can already say that MS has done great work. Here are some of the major improvements.

Note: Windows Server 2016 is already available for evaluation

UPDATE 06/26: added “real” pictures for checkpoints, shared vhdx and hyper-v manager alternate credentials + link to the recently published “Shielded VMs and Guarded Fabric Validation Guide for Windows Server 2016” and videos from Microsoft Storage Team about Storage Replica

Update 09/07:

- Workgroup and multi-domain clusters in TP3

- MS released TP3

- Containers Preview is available

- Affinity for cluster sites (site-aware failover clusters in WS 2016)

- Data deduplication for nano server

Update 10/14:

Early preview of Nested virtualization is available in Windows 10 Build 10565

Update 11/19:

System Center 2016 TP4 Evaluation VHDs are available (I guess with Server 2016 TP4 inside!)

Windows Server 2016 TP4 is going to be officially released next week! RTM is coming! Windows Server 2016 TP4 is available to download!

Update 11/25-11/27:

Added new TP4 features

Nested Virtualization in Windows Server 2016

Update 12/4:

Windows Server 2016 Licensing and Pricing

Update 05/26:

Windows Server 2016 TP5 is available

Update 07/13:

Launch Dates for Windows Server 2016

Update 08/26:

Significant Scale Changes in Windows Server 2016

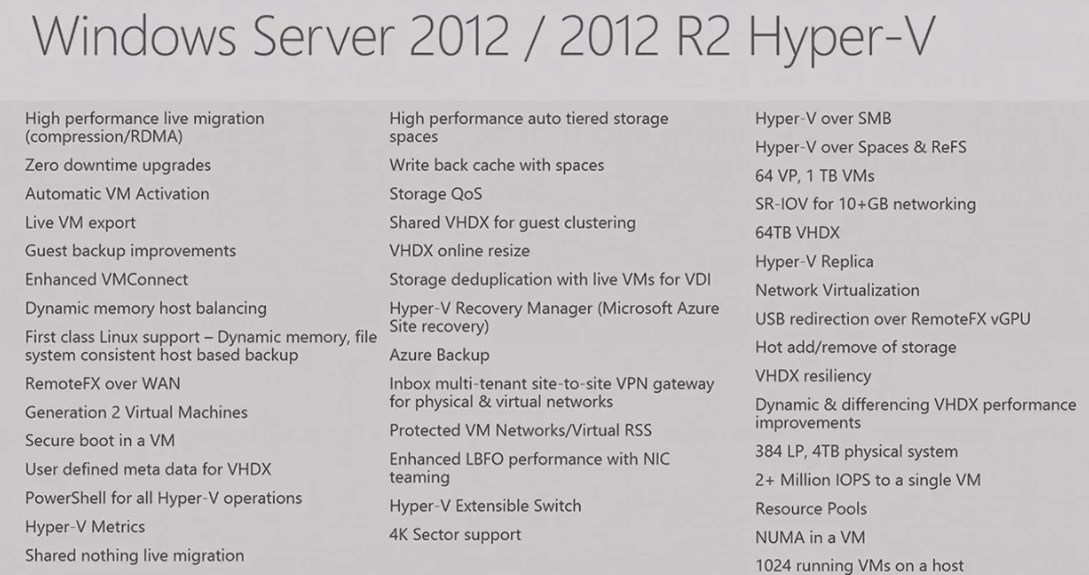

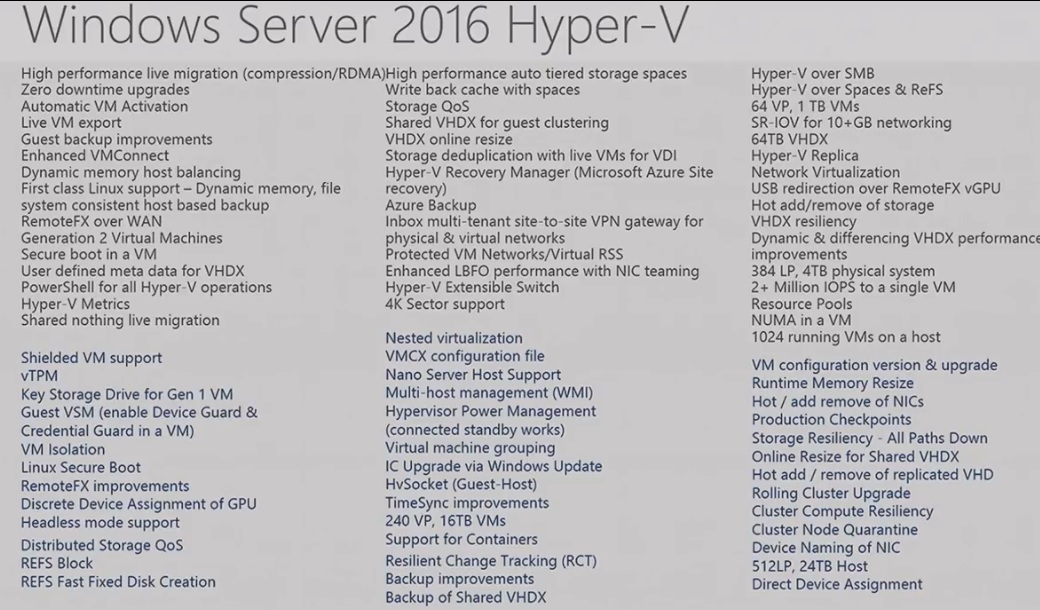

Comparison of the 2012/2012R2 and 2016

2012/2012R2:

2016:

Cluster OS Rolling Upgrade

You can now upgrade your existing Server 2012 R2 Hyper-V cluster to Server 2016 without downtime. The cluster can operate with a mix of OS versions, and you can still roll back until you update the cluster functional level. Once you update the cluster functional level to Server 2016, there is no way to return it to 2012 R2. See my post for more information: Step-By-Step: Cluster OS Rolling Upgrade in Windows Server Technical Preview

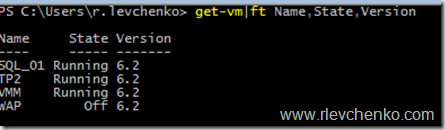

Virtual Machine Configuration Version

When you move or import a virtual machine to a server running Hyper-V on Windows Server 2016 from Windows Server 2012 R2, the virtual machine’s configuration file is not automatically upgraded. This allows the virtual machine to be moved back to a server running Windows Server 2012 R2. You will not have access to new virtual machine features until you manually update the virtual machine configuration version.

The virtual machine configuration version indicates which version of Hyper-V the virtual machine’s configuration, saved state, and snapshot files are compatible with.

Server 2012 R2 has VM configurations version 5

Server 2016 TP1 – version 6.0

Server 2016 TP2/Tp3 – version 6.2

Server 2016 TP 4 – version 7.0

Server 2016 TP5 – version 7.1

Server 2016 RTM – version 8.0

Virtual machines with configuration version 5 are compatible with Windows Server 2012 R2 and can run on both Windows Server 2012 R2 and Windows Server 2016. Virtual machines with configuration version 6 and later are compatible with Windows Server 2016 and will not run on Hyper-V running on Windows Server 2012 R2

To check VM configuration version:

Get-VM|ft Name,Version

TP4:

To update configuration version (for TP4. In TP5 cmdlet has been changed to “Update-VMVersion”):

Update-VmConfigurationVersion vmname

#Get all VMs in cluster and update configuration versions

Get-ClusterGroup|? {$_.GroupType -EQ "VirtualMachine"}|Get-VM|Update-VMConfigurationVersion

Confirm

Are you sure you want to perform this action?

Performing a configuration version update of "New Virtual Machine" will prevent it from being migrated to or imported on previous versions of Windows. This operation is not reversible.

[Y] Yes [A] Yes to All [N] No [L] No to All [S] Suspend [?] Help (default is "Y"): a

Discrete Device Assignment

Windows Server 2012 R2 has a similar feature called “SR-IOV”, which is basically used to pass network adapters through to a guest. In Windows Server 2016 TP4 Microsoft announced “Discrete Device Assignment” (DDA), which allows you to take some officially supported PCI Express devices (the list will be available later) and present them to VMs (it’s recommended to use only DDA-supported devices in production).

DDA is based on SR-IOV technology as well. The main benefit of DDA is faster access to the device from the guest, which may be needed in scenarios where performance is critical.

Windows Server 2016 will allow NVMe devices and GPUs (graphics processors) to be assigned to guest VMs. Other devices such as USB/RAID/SAS controllers may work with DDA, and some of them have been tested by Microsoft, but we need to wait for official support confirmation.

PowerShell cmdlets related to DDA:

- Add-VMAssignableDevice

- Add-VMHostAssignableDevice

- Dismount-VMHostAssignableDevice

- Get-VMAssignableDevice

- Get-VMHostAssignableDevice

- Mount-VMHostAssignableDevice

- Remove-VMAssignableDevice

- Remove-VMHostAssignableDevice
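As a rough sketch of how these cmdlets fit together, the sequence below follows the flow Microsoft documented for the released version of Windows Server 2016, so parameters may differ in TP4. The VM name and the device-name filter are hypothetical placeholders, and the VM is assumed to be turned off.

```powershell
# Hypothetical names: replace with your own VM and device filter.
$vmName = 'DDA-VM'
$device = Get-PnpDevice -PresentOnly |
    Where-Object { $_.FriendlyName -like '*NVMe*' } |
    Select-Object -First 1

# Read the PCI Express location path that identifies the device to Hyper-V.
$locationPath = (Get-PnpDeviceProperty -InstanceId $device.InstanceId `
    -KeyName 'DEVPKEY_Device_LocationPaths').Data[0]

# A VM with an assigned device cannot be saved, so it must turn off when the host stops.
Set-VM -Name $vmName -AutomaticStopAction TurnOff

# Disable the device on the host, dismount it, then assign it to the VM.
Disable-PnpDevice -InstanceId $device.InstanceId -Confirm:$false
Dismount-VMHostAssignableDevice -LocationPath $locationPath -Force
Add-VMAssignableDevice -VMName $vmName -LocationPath $locationPath

# Verify the assignment.
Get-VMAssignableDevice -VMName $vmName
```

To give the device back to the host, the reverse order should apply: Remove-VMAssignableDevice on the VM, then Mount-VMHostAssignableDevice with the same location path.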

Host DDA requirements are similar to the SR-IOV virtualization:

- The processor must have either Intel’s Extended Page Table (EPT) or AMD’s Nested Page Table (NPT).

- The chipset must have:

  - Interrupt remapping: Intel’s VT-d with the Interrupt Remapping capability (VT-d2) or any version of AMD I/O Memory Management Unit (I/O MMU).

  - DMA remapping: Intel’s VT-d with Queued Invalidations or any AMD I/O MMU.

  - Access control services (ACS) on PCI Express root ports.

- The firmware tables must expose the I/O MMU to the Windows hypervisor (this feature might be turned off in the UEFI or BIOS). For instructions, see the hardware documentation or contact your hardware manufacturer.

- The device must be a GPU or a non-volatile memory express (NVMe) device. For GPUs, only certain devices support discrete device assignment. To verify, see the hardware documentation or contact your hardware manufacturer.

For detailed information use the following links:

New file format of VM configuration files

In Windows Server 2012 R2 Hyper-V operates with:

- XML – VM configuration file

- BIN – snapshot placeholder (contains the virtual machine’s memory)

- VSV – virtual machine saved state file

- VHD/VHDX – virtual hard disks

- AVHD/AVHDX – hard disks for snapshots

- + files for Smart Paging and Hyper-V replica

Microsoft decided to replace the configuration file (XML) and the BIN + VSV files with VMCX (Virtual Machine Configuration) and VMRS (Virtual Machine Runtime State), respectively. The new files are also more resistant to storage corruption and more efficient at reading and writing changes to the VM configuration.

Editing the XML configuration files was never supported (but everyone does it). Bad news for some: VMCX/VMRS are binary.

Linux Secure Boot

Secure Boot is a feature that helps prevent unauthorized firmware, operating systems, or UEFI drivers (also known as option ROMs) from running at boot time. Secure boot is enabled by default. Finally, Linux operating systems running on generation 2 virtual machines can now boot with the secure boot option enabled. Ubuntu 14.04 and later, and SUSE Linux Enterprise Server 12, are enabled for secure boot on hosts running Windows Server 2016 TP. Before you boot the virtual machine for the first time, you must specify that the virtual machine should use the Microsoft UEFI Certificate Authority. To enable Secure Boot use PowerShell:

Set-VMFirmware vmname -SecureBootTemplate MicrosoftUEFICertificateAuthority
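A slightly fuller sketch of the same step, assuming a generation 2 VM that is turned off; the VM name is a hypothetical placeholder. It sets the template explicitly and then reads the firmware settings back to confirm them.

```powershell
# Hypothetical VM name; the VM must be generation 2 and turned off.
$vmName = 'Ubuntu-Gen2'

# Keep Secure Boot on and switch to the template that trusts the Microsoft UEFI CA.
Set-VMFirmware -VMName $vmName -EnableSecureBoot On `
    -SecureBootTemplate MicrosoftUEFICertificateAuthority

# Read the settings back before the first boot.
Get-VMFirmware -VMName $vmName | Format-List VMName, SecureBoot, SecureBootTemplate
```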

Built-In Change Tracking

Backup vendor partners had to write their own kernel-mode filesystem filters in order to do block change tracking, because WS2012/R2 have no such capability in the platform. Change tracking has been added to Windows Server 2016, and now backup solutions can use all its features without writing additional filters. So built-in change tracking provides a more efficient and reliable way of backing up a Hyper-V environment.


New networking features

- Switch embedded teaming (SET). SET integrates some of the NIC Teaming functionality into the Hyper-V Virtual Switch. SET allows you to group between one and eight physical Ethernet network adapters into one or more software-based virtual network adapters. These virtual network adapters provide fast performance and fault tolerance in the event of a network adapter failure. SET member network adapters must all be installed in the same physical Hyper-V host to be placed in a team.

New-VMSwitch -Name SET -NetAdapterName "NIC1","NIC2" -EnableEmbeddedTeaming $true

- In Windows Server 2016, you can use fewer network adapters while using RDMA, with or without SET. In Windows Server 2012 R2, network adapters that provide RDMA services cannot be bound to a Hyper-V Virtual Switch, so using both RDMA and Hyper-V on the same computer increases the number of physical network adapters that must be installed in the Hyper-V host.

- Virtual machine multi queues (VMMQ). Improves on VMQ throughput by allocating multiple hardware queues per virtual machine. The default queue becomes a set of queues for a virtual machine, and traffic is spread between the queues.

- Quality of service (QoS) for software-defined networks. Manages the default class of traffic through the virtual switch within the default class bandwidth.
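To illustrate SET together with RDMA on host virtual adapters, here is a minimal sketch. The switch, NIC and vNIC names are hypothetical, and it assumes the physical adapters are RDMA-capable.

```powershell
# Hypothetical names: 'NIC1'/'NIC2' are physical RDMA-capable adapters on the same host.
New-VMSwitch -Name 'SETswitch' -NetAdapterName 'NIC1','NIC2' `
    -EnableEmbeddedTeaming $true -AllowManagementOS $false

# Add host vNICs on top of the SET switch (for SMB traffic, for example).
Add-VMNetworkAdapter -ManagementOS -SwitchName 'SETswitch' -Name 'SMB1'
Add-VMNetworkAdapter -ManagementOS -SwitchName 'SETswitch' -Name 'SMB2'

# Enable RDMA on the host vNICs; no separate physical adapters are needed for it.
Enable-NetAdapterRdma -Name 'vEthernet (SMB1)','vEthernet (SMB2)'

# Inspect the team members and the RDMA state.
Get-VMSwitchTeam -Name 'SETswitch' | Format-List
Get-NetAdapterRdma
```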

New Hyper-V Switch Type

In previous Windows Server versions we had the following switch types: Private, Internal and External. Things are changing.

The latest Windows Server 2016 and Windows 10 builds bring us a new switch type: NAT. The NAT switch is used by Containers as well (in TP3 you need to configure the container host using the script New-ContainerHost.ps1, which creates a NAT switch by default). A NAT switch allows virtual machines located in an internal subnet to reach external networks through NAT (Network Address Translation).

Because there are no options for it in the GUI, you have to use PowerShell to create and manage a Hyper-V NAT switch.

- New-VMSwitch : creates Hyper-V Switch with Switch Type NAT

- New-NetNat : adds the required NAT object (NIC) with the reserved IP x.x.x.1 (you can see it in ncpa.cpl)

- Connect vNICs to the switch and assign them IP addresses from the defined subnet with default gateway x.x.x.1 (172.34.3.1 in my case)

UPDATE: in Windows Server 2016 Technical Preview 5 and RTM the “NAT” VM Switch Type has been removed to resolve a layering violation. But it can still be done using the following operations:

- Create an Internal VM Switch

- Create a Private IP network for NAT

- Assign the default gateway IP address of the private network to the internal VM switch Management Host vNIC
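The three operations above can be sketched roughly as follows. The switch name, NAT name and the 192.168.100.0/24 subnet are hypothetical values chosen for illustration.

```powershell
# Hypothetical names and subnet.
$switchName = 'NATSwitch'

# 1. Create an internal VM switch (this also creates a host vNIC named "vEthernet (NATSwitch)").
New-VMSwitch -Name $switchName -SwitchType Internal

# 2-3. Assign the gateway address of the private network to the host vNIC.
New-NetIPAddress -IPAddress 192.168.100.1 -PrefixLength 24 `
    -InterfaceAlias "vEthernet ($switchName)"

# Create the NAT object that translates traffic from the private network.
New-NetNat -Name 'NATNetwork' -InternalIPInterfaceAddressPrefix '192.168.100.0/24'

# Check the result.
Get-NetNat
```

VMs connected to this switch then need an address from the same subnet with 192.168.100.1 as the default gateway.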

More info: What Happened to the “NAT” VMSwitch?

Hot add and remove for network adapters and static memory

You can now add or remove a Network Adapter while the virtual machine is running, without incurring downtime. This works for generation 2 virtual machines running both Windows and Linux operating systems.

You can also adjust the amount of memory assigned to a virtual machine while it is running. This works for both generation 1 and generation 2 virtual machines for ALL types of memory even if it’s Static!

Hot add and remove network adapter to all running VMs (Degraded status for SQL_01 and VMM VMs is because of integration services. I have not upgraded IS yet on these VMs)
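A minimal sketch of both operations against a running VM; the VM, switch and adapter names are hypothetical, and the network adapter part assumes a generation 2 VM.

```powershell
# Hypothetical names; the VM is assumed to be running.
$vmName = 'VM01'

# Hot add a network adapter.
Add-VMNetworkAdapter -VMName $vmName -SwitchName 'External' -Name 'HotAddedNic'

# Hot remove it again.
Get-VMNetworkAdapter -VMName $vmName -Name 'HotAddedNic' | Remove-VMNetworkAdapter

# Resize static memory while the VM is running.
Set-VMMemory -VMName $vmName -StartupBytes 4GB
Get-VMMemory -VMName $vmName
```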

Storage Spaces Direct

A huge update to Hyper-V related services: Storage Spaces. In previous Windows Server versions there were several ways to provide shared storage to a Hyper-V cluster: SMB, iSCSI, FC, and Storage Spaces with JBOD (just a bunch of disks). I had a lot of customers who just wanted to use their local storage and share it between hosts. With third-party software (StarWind, for example) that is possible with at least 2 nodes. Now you have another option: Storage Spaces Direct (S2D). S2D provides the ability to create shared storage from internal disks, as shown in the picture below. To evaluate Storage Spaces Direct in Windows Server, the simplest deployment is to use at least four (!) generation 2 Hyper-V virtual machines with at least two data disks per virtual machine. MS has greatly improved its own SDS (Software Defined Storage).

Update: S2D in 2016 RTM requires from 2 to 16 servers with locally attached SATA, SAS, or NVMe drives. Each server must have at least 2 solid-state drives and at least 4 additional drives. The SATA and SAS devices should be behind a host-bus adapter (HBA) and SAS expander.

One magic line to enable software storage bus:

# Enable Cluster S2D

Enable-ClusterStorageSpacesDirect
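For context, a minimal end-to-end sketch around that line might look like this. Node names, the cluster name and the volume size are hypothetical, and the commands are assumed to be run on one of the nodes in the released version of Windows Server 2016.

```powershell
# Hypothetical node and cluster names.
$nodes = 'Node1','Node2','Node3','Node4'

# Validate the nodes, including the Storage Spaces Direct tests.
Test-Cluster -Node $nodes `
    -Include 'Storage Spaces Direct','Inventory','Network','System Configuration'

# Create the cluster without claiming any shared storage.
New-Cluster -Name 'S2D-Cluster' -Node $nodes -NoStorage

# Enable the software storage bus and create the pool from the local disks.
Enable-ClusterStorageSpacesDirect

# Carve a cluster shared volume out of the automatically created pool.
New-Volume -StoragePoolFriendlyName 'S2D*' -FriendlyName 'Volume1' `
    -FileSystem CSVFS_ReFS -Size 1TB
```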

For additional info: https://msdn.microsoft.com/en-us/library/mt126109.aspx

Storage Replica

I had a customer who wanted to create a stretched cluster between 2 sites with existing IBM SANs. There were a few ways to achieve it: buy additional licenses for the SANs (in the IBM world: remote copy and flash copy) or upgrade to a higher model with advanced features on board. The cost of that solution was surprisingly high.

But now you have an alternative from MS. The new feature is called Storage Replica. It allows you to replicate between standalone Hyper-V hosts or between clusters (including site-to-site). Storage Replica is hardware agnostic, provides a more effective solution for DR (Disaster Recovery), uses SMB3 for transport and supports both synchronous and asynchronous replication!

More info: https://technet.microsoft.com/en-us/library/mt126183.aspx

+ see post https://rlevchenko.com/2015/06/11/storage-team-at-microsoft-storage-replica-videos/
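As an illustration, a server-to-server partnership could be sketched like this. The server names, replication group names and drive letters are hypothetical; each side is assumed to have a data volume (D:) and a separate log volume (E:).

```powershell
# Hypothetical servers, groups and volumes.
# Optional: test whether the topology meets the requirements and write a report.
Test-SRTopology -SourceComputerName 'SR-SRV01' -SourceVolumeName 'D:' -SourceLogVolumeName 'E:' `
    -DestinationComputerName 'SR-SRV02' -DestinationVolumeName 'D:' -DestinationLogVolumeName 'E:' `
    -DurationInMinutes 30 -ResultPath 'C:\Temp'

# Create the replication partnership (use Asynchronous for long-distance links).
New-SRPartnership -SourceComputerName 'SR-SRV01' -SourceRGName 'RG01' `
    -SourceVolumeName 'D:' -SourceLogVolumeName 'E:' `
    -DestinationComputerName 'SR-SRV02' -DestinationRGName 'RG02' `
    -DestinationVolumeName 'D:' -DestinationLogVolumeName 'E:' `
    -ReplicationMode Synchronous

# Check the state of the replication groups.
Get-SRGroup
Get-SRPartnership
```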

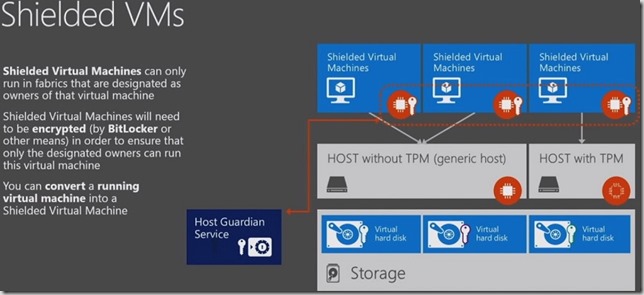

Shielded VMs

Shielded Virtual Machines can only run in fabrics that are designated as owners of that virtual machine. Shielded Virtual Machines need to be encrypted (by BitLocker or other means) to ensure that only designated owners can run them. You can convert a running virtual machine into a Shielded Virtual Machine. Shielded VMs work together with the Host Guardian Service and TPM.

UPDATE: Shielded VMs and Guarded Fabric Validation Guide for Windows Server 2016

Changes in Storage Quality of Service

Storage QoS was introduced in Windows Server 2012 R2. You can set maximum and minimum IOPS thresholds for virtual hard disks (excluding shared virtual hard disks).

It works great on standalone Hyper-V hosts, but if you have a cluster with a lot of virtual machines or even tenants, it can be complicated to achieve the right QoS for all cluster resources.

Storage QoS in the upcoming Windows Server 2016 provides a way to centrally monitor and manage storage performance for virtual machines. The feature automatically improves storage resource fairness between multiple virtual machines using the same file server cluster. In other words, storage QoS is distributed across a group of virtual machines and virtual hard disks.
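A minimal sketch of the policy-based approach, using the cmdlet and parameter names from the released version of Windows Server 2016 (they changed between technical previews). The policy name, VM name and IOPS limits are hypothetical.

```powershell
# Run on the storage cluster: create a policy with hypothetical limits.
$policy = New-StorageQosPolicy -Name 'Gold' -PolicyType Dedicated `
    -MinimumIops 100 -MaximumIops 500

# Run on the Hyper-V host: attach the policy to all virtual disks of a VM.
# $policy.PolicyId must be passed over if the host is a different machine.
Get-VM -Name 'VM01' | Get-VMHardDiskDrive |
    Set-VMHardDiskDrive -QoSPolicyID $policy.PolicyId

# Run on the storage cluster: monitor the flows centrally.
Get-StorageQosFlow | Sort-Object StorageNodeIOPS -Descending |
    Format-Table InitiatorName, StorageNodeIOPS, Status, FilePath -AutoSize
```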

Windows Server 2016 TP2 Storage QoS demo: Managing policies with VMM by Jose Barreto

https://www.youtube.com/watch?v=vQntxcztb6w

Windows Server 2016 TP2 Storage QoS demo: Managing policies with PowerShell by Jose Barreto

https://www.youtube.com/watch?v=RhPPfPW6EM8

Virtual Machine Cluster and Storage Resiliency

One of my favorite new features (yes, it’s not Hyper-V itself, it’s a cool new feature in WSFC, but who is using Hyper-V without a cluster? :))

If you have a highly available VM and its host becomes unresponsive, the host goes into isolation mode for 4 minutes (by default), but all VMs on this host keep running during this period. If the host becomes responsive within this time frame (4 minutes), isolation mode ends and the node operates normally. If a host switches between normal and isolation modes too often, it is marked as quarantined and all its VMs migrate to another (healthy) node in the cluster.

Another great feature is VM Storage Resiliency. A storage fabric outage no longer means that virtual machines crash. In case of storage failure, virtual machines pause and resume automatically in response to storage fabric problems.

Note: In Windows Server 2012 R2 we only have automatic failover of VMs if a host becomes unresponsive.
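The isolation and quarantine behavior is controlled by cluster common properties. A short sketch of how to inspect and adjust them follows; the node name and the new value are hypothetical.

```powershell
# Show the resiliency and quarantine settings of the local cluster.
Get-Cluster | Format-List ResiliencyLevel, ResiliencyDefaultPeriod,
    QuarantineThreshold, QuarantineDuration

# The isolation period is in seconds (240 = the 4 minutes mentioned above).
# Hypothetical change: shorten it to 2 minutes.
(Get-Cluster).ResiliencyDefaultPeriod = 120

# Bring a quarantined node back manually once the underlying issue is fixed.
Start-ClusterNode -Name 'Node2' -ClearQuarantine
```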

Replica support for hot add of VHDX

Hyper-V Replica was introduced in Windows Server 2012 and updated in 2012 R2. There was an annoying restriction (for me personally): you could not hot add a new VHDX to a VM that was already being replicated, or hot remove one. In that case there was only one option: reconfigure replication. Not a good way.

In Windows Server 2016, Hyper-V Replica lets you add a new VHDX without any panic. When you add a new virtual hard disk to a virtual machine that is being replicated, it is automatically added to the non-replicated set. You can update this set online through PowerShell:

Set-VMReplication VMName -ReplicatedDisks (Get-VMHardDiskDrive VMName)

Host Resource Protection

This feature comes from Azure. Hyper-V can now automatically detect virtual machines that are attacking the fabric (trying to consume excessive virtual resources) and reduce the resource allocation to those “bad” VMs.
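In the released version of Windows Server 2016 this protection is switched on per VM through the processor settings. A minimal sketch with a hypothetical VM name:

```powershell
# Hypothetical VM name.
Set-VMProcessor -VMName 'VM01' -EnableHostResourceProtection $true

# Confirm the setting.
Get-VMProcessor -VMName 'VM01' | Format-List VMName, EnableHostResourceProtection
```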

Production Checkpoints

In prior versions there was only one type of checkpoint, which was not supported in production environments or by many server applications. Microsoft introduces a new type of checkpoint: production checkpoints.

They are enabled by default in Windows Server 2016 and create snapshots through VSS instead of saved state. You can switch between production and standard checkpoints.
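Switching between the checkpoint types can be sketched like this; the VM name and checkpoint name are hypothetical.

```powershell
# Hypothetical VM name.
$vmName = 'VM01'

# Production: use VSS (or file system freeze on Linux), fall back to a standard checkpoint if that fails.
# Other values: ProductionOnly (no fallback), Standard, Disabled.
Set-VM -Name $vmName -CheckpointType Production

# Take a checkpoint and list the existing ones.
Checkpoint-VM -Name $vmName -SnapshotName 'Before update'
Get-VMSnapshot -VMName $vmName
```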

Nested Virtualization

The most awaited feature since the first version of Hyper-V was released. The ability to run VMs inside VMs has been requested by developers, MVPs, MCTs and other people (nowadays we use VMware for nested setups). And now Microsoft has officially announced that nested virtualization will be a part of Windows Server 2016. Here is the user voice thread and the answer from MS: http://windowsserver.uservoice.com/forums/295050-virtualization/suggestions/7955529-nested-hypervisor

UPD: Early preview of Nested virtualization is available in Windows 10 Build 10565

UPD: nested virtualization has been officially introduced in WS TP4. Please read my post Nested Virtualization in Windows Server 2016
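A minimal sketch of preparing a VM to act as a nested Hyper-V host. The VM name is hypothetical, the VM is assumed to be turned off, and the exact prerequisites varied between preview builds.

```powershell
# Hypothetical VM name; the VM must be turned off.
$vmName = 'NestedHost'

# Expose the hardware virtualization extensions to the guest.
Set-VMProcessor -VMName $vmName -ExposeVirtualizationExtensions $true

# Dynamic memory is not supported for a VM that runs Hyper-V itself.
Set-VMMemory -VMName $vmName -DynamicMemoryEnabled $false

# Let traffic from nested VMs pass through the outer VM's network adapter.
Get-VMNetworkAdapter -VMName $vmName | Set-VMNetworkAdapter -MacAddressSpoofing On
```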

ReFS Accelerated VHDX Operations

Creating a fixed-size VHDX in Windows Server 2012 R2 and prior versions can take a lot of time, because Hyper-V also writes zeros to every block on storage (ODX can help, but not 100%). In Windows Server 2016, creating a fixed-size VHDX on a ReFS volume takes far less time than the identical operation in prior Windows Server versions (for example, creating a 1 GB VHDX on 2012 R2 takes ~72 seconds, while the same operation on 2016 with ReFS takes 4.7 seconds; creating a 50 GB fixed disk on 2016 with ReFS takes 3.9 seconds, while in Windows Server 2012 R2 it could take hours!). This works for merge operations too.
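If you want to measure this yourself, a simple sketch is to time the creation of a fixed disk. The path is hypothetical and is assumed to point at a ReFS-formatted volume; results will depend on your hardware.

```powershell
# Hypothetical path on a ReFS volume.
$path = 'R:\VHDs\fixed-50gb.vhdx'

# Time the creation of a 50 GB fixed-size VHDX.
$elapsed = Measure-Command {
    New-VHD -Path $path -SizeBytes 50GB -Fixed | Out-Null
}
"Created $path in $($elapsed.TotalSeconds) seconds"
```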

Virtual TPM

Windows Server 2016 Hyper-V allows you to encrypt your VMs using BitLocker (through a virtual Trusted Platform Module chip added to the VM). Another great feature for building the most secure cloud infrastructure. Customers can protect their VMs with BitLocker and not worry about privacy.

Key Storage Drive for Generation 1 VMs

vTPM, described earlier, allows us to use BitLocker inside Gen2 VMs. But what about gen1? Windows Server 2016 provides a way to safely use BitLocker inside a generation 1 virtual machine as well. This solution is called “Key Storage Drive”. I’ll add more info later.
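For a lab, a virtual TPM can be added with a local key protector, as sketched below. The VM name is hypothetical, and the VM is assumed to be generation 2 and turned off; in production the key protector would normally come from the Host Guardian Service instead.

```powershell
# Hypothetical VM name; generation 2 VM, turned off.
$vmName = 'VM01'

# Lab-only shortcut: create a key protector stored on the local host.
Set-VMKeyProtector -VMName $vmName -NewLocalKeyProtector

# Add the virtual TPM and check the security settings.
Enable-VMTPM -VMName $vmName
Get-VMSecurity -VMName $vmName
```

After that, BitLocker can be enabled inside the guest in the usual way.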

Shared VHDX

There are some limitations for virtual machines that use shared VHDX in Windows Server 2012 R2. In Windows Server 2016 these restrictions become less significant.

- Now you can use host-based backup for guest clusters with shared VHDX as easily as for regular virtual machines (in 2012 R2 the recommendation was to use a backup agent inside the guests)

- You can resize a shared VHDX online, even while the guest cluster is running

- New file format for shared VHDX (VHDS, Virtual Hard Disk Set). This format is only for shared virtual hard disks and enables backup of virtual machine groups that use a shared virtual hard disk. This format is not supported in operating systems earlier than Windows 10 and WS TP (2016).

PowerShell Direct

Now you can run PowerShell inside the guest OS remotely (from the parent host) through VMBus.

No network connection is required! It’s just awesome!

Note: Works only from Windows 10/ Windows Server 2016 hosts to Windows 10/Windows Server 2016 guests
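A minimal sketch of PowerShell Direct from the host; the VM name is hypothetical and the credentials must be valid inside the guest.

```powershell
# Hypothetical VM name; credentials of an account inside the guest.
$vmName = 'VM01'
$cred = Get-Credential

# Run a command inside the guest over VMBus, with no network required.
Invoke-Command -VMName $vmName -Credential $cred -ScriptBlock {
    Get-Service -Name vmicheartbeat
}

# For an interactive session, use instead:
# Enter-PSSession -VMName $vmName -Credential $cred
```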

RemoteFX improvements

In Windows Server 2012 R2, the RemoteFX video adapter is limited to a maximum of 256 MB of dedicated VRAM, supports only OpenGL 1.1 (!!) and has no support for OpenCL.

In the real world, RemoteFX in 2012 R2 is not suitable for modern applications, whether it’s Autocad Re-Cap (OpenGL 3.3 and 1 GB VRAM are required) or Photoshop (CC requires at least OpenGL 2.0 and 512 MB VRAM).

Microsoft has realized that there is no time to lose and has updated the RemoteFX adapter with new VRAM capabilities that remove some of the limiting factors:

- A larger dedicated VRAM amount (currently up to 1GB) – A VM can now be configured to obtain up to 1GB of dedicated video memory. Depending on the amount of system memory assigned to the VM, this can provide up to a total of 2GB of VRAM (1GB dedicated and 1GB shared). (I need to get some more info about it. I’ve never seen this on official slides, only @msrdsblog.)

- Configurable dedicated VRAM – Previously, VRAM was set for a VM dynamically based on the number of monitors and the resolution configured for the VM. This association has been removed, and dedicated VRAM can now be configured independently of a VM’s number of monitors or resolution. This can be configured using PowerShell cmdlets in the technical preview.

- OpenGL 4.4 and OpenCL 1.1 API Support

These settings can be configured by PowerShell:

NAME

Set-VMRemoteFx3dVideoAdapter

SYNTAX

Set-VMRemoteFx3dVideoAdapter [-VM] <VirtualMachine[]> [[-MonitorCount] <byte>] [[-MaximumResolution] <string>]

[[-VRAMSizeBytes] <uint64>] [-Passthru] [-WhatIf] [-Confirm] [<CommonParameters>]
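Based on the syntax above, a call could look like the sketch below. The VM name and the chosen values are hypothetical, and the VM is assumed to already have a RemoteFX 3D video adapter and to be turned off.

```powershell
# Hypothetical VM name and values.
$vm = Get-VM -Name 'VDI01'

# Two monitors at 1920x1200 with 1 GB of dedicated VRAM (the value is in bytes).
Set-VMRemoteFx3dVideoAdapter -VM $vm -MonitorCount 2 `
    -MaximumResolution '1920x1200' -VRAMSizeBytes 1GB

# Check the resulting adapter configuration.
Get-VMRemoteFx3dVideoAdapter -VM $vm
```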

RemoteFX can be used in new personal session desktops as well. Please check out my post What’s new in RDS Windows Server 2016?

Integration Services delivered through Windows Update

Integration components are sets of drivers and services that help your virtual machines have a more consistent state and perform better by enabling the guest to use synthetic devices.

Previously, to update Integration Services in a VM you had to insert the ISO with integration services via the “Insert Integration Services Disk” action, install it manually and restart the VM. Now there is no integration services disk, and all Hyper-V components that need to be updated inside the VM are delivered by Windows Update. This new behavior adds more flexibility in large environments (you can distribute updates through WSUS). For information about integration services for Linux guests, see Linux and FreeBSD Virtual Machines on Hyper-V.

Integration component updates are listed as Important and have KB with index 3004908 (in the future this can be changed)

Hyper-V Manager Improvements

- Alternate credentials support – you can now use a different set of credentials in Hyper-V manager when connecting to another Windows Server 2016. You can also choose to save these credentials to make it easier to log on again later. + you can connect by IP address

- Down-level management – you can now use Hyper-V manager to manage more versions of Hyper-V. With Hyper-V manager in the Windows Server 2016, you can manage computers running Hyper-V on Windows Server 2012, Windows 8, Windows Server 2012 R2 and Windows 8.1.

- Updated management protocol – Hyper-V manager has been updated to communicate with remote Hyper-V hosts using the WS-MAN protocol, which permits CredSSP, Kerberos or NTLM authentication. Using CredSSP to connect to a remote Hyper-V host allows you to perform a live migration without first enabling constrained delegation in Active Directory. Moving to the WS-MAN-based infrastructure also simplifies the configuration necessary to enable a host for remote management because WS-MAN connects over port 80, which is open by default.

- Improved Hyper-V Cluster Management (example: get-vm -computername “cluster name” from hyper-v host will output all VMs in cluster)

New options in installation wizard

There is no option to install Windows Server with full GUI.

..(with local admin tools) is equal to Windows Server with minshell (servermanager..)

Here is a user voice (please vote or post your thoughts! MS checks this!) to turn back an option with GUI

Update: Microsoft added the GUI back. Your voices have been heard. Since the TP3 release you can install Windows Server with the full GUI (more than just the local admin tools in TP1/TP2).

Another new installation option is NanoServer. It is the minimal footprint installation option of Windows Server that is highly optimized for the cloud.

- NanoServer has a 93 percent lower VHD size

- 92 percent fewer critical bulletins

- 80 percent fewer reboots than a typical Windows Server

- It takes 3 minutes to install NanoServer from bare metal

- UPD 09/07: data deduplication is fully supported in nano server in non-clustered environments (preview status, TP3 is required). Deduplication job cancellation must be done manually (using the Stop-DedupJob)

UPD: Nano Server has been updated in TP4 . Now you can install IIS and DNS server roles in addition to Hyper-V and Scale-Out (in cluster or not)

Hyper-V Containers

Last October, Microsoft and Docker, Inc. jointly announced plans to bring containers to developers across the Docker and Windows ecosystems via Windows Server Containers, available in the next version of Windows Server. While Hyper-V containers offer an additional deployment option between Windows Server Containers and the Hyper-V virtual machine, you will be able to deploy them using the same development, programming and management tools you would use for Windows Server Containers. In addition, applications developed for Windows Server Containers can be deployed as a Hyper-V Container without modification, providing greater flexibility for operators who need to choose degrees of density, agility, and isolation in a multi-platform, multi-application environment.

UPD: Hyper-V containers are available in TP4!

UPD: Docker commercial engine is available at Windows Server 2016 without any additional charge!

SLAT Requirement

Please note this before migrating or upgrading: current TPs and the future RTM version of Windows Server 2016 require a SLAT-compatible CPU (EPT for Intel, RVI for AMD).

Previously, this was a requirement only for Hyper-V on client OS (Windows 8/8.1).

Connected Standby support

Starting with Windows 8 and Windows 8.1, connected standby is a new low-power state that features extremely low power consumption while maintaining Internet connectivity.

Connected standby has multiple benefits to the user over the experience that traditional ACPI Sleep (S3) and Hibernate (S4) states deliver. The most prominent benefit is instant resume from sleep. Connected standby PCs resume extremely quickly—typically, in less than 500 milliseconds. The performance of a resume from connected standby is almost always faster than resuming from the traditional Sleep (S3) state and significantly faster than resuming from the Hibernate (S4) or Shutdown (S5) state.

When the Hyper-V role is enabled on a computer that uses the Always On/Always Connected (AOAC) power model (Surface Pro 3, for example), the Connected Standby power state is now available and works as expected.

How can you improve the next version of Windows Server?

Vote and post at http://windowsserver.uservoice.com/forums/295050-virtualization

i’ll be glad once i can use vhdx on storage spaces with file integrity on so vhdx files can be protected against bitrot.

This is an awesome post. Thanks for going so in-depth with Hyper V. We are talking about new features too on this podcast: http://blog.directionstraining.com/microsoft-windows-server-2016/top-3-fun-new-features-of-windows-server-2016


Great readd